What can tech learn from sex workers?

Sexual Ethics, Tech Design & Decoding Stigma

By Zahra Stardust, Gabriella Garcia and Chibundo Egwuatu

With thanks to Livia Foldes & Danielle Blunt. Images by Decoding Stigma.

This article first appeared on Medium as part of Harvard’s Berkman Klein Center Collection.

What would tech look like if it were designed by sex workers? What values would be embedded in its design?

A new collective, Decoding Stigma, hopes to find out by bridging the gap between sex work, technology, and academia. Its goals are twofold: to prioritize sexual autonomy as a necessary ethical question for researchers and technologists, and to design a liberatory future in which sexually stigmatized voices are amplified and celebrated.

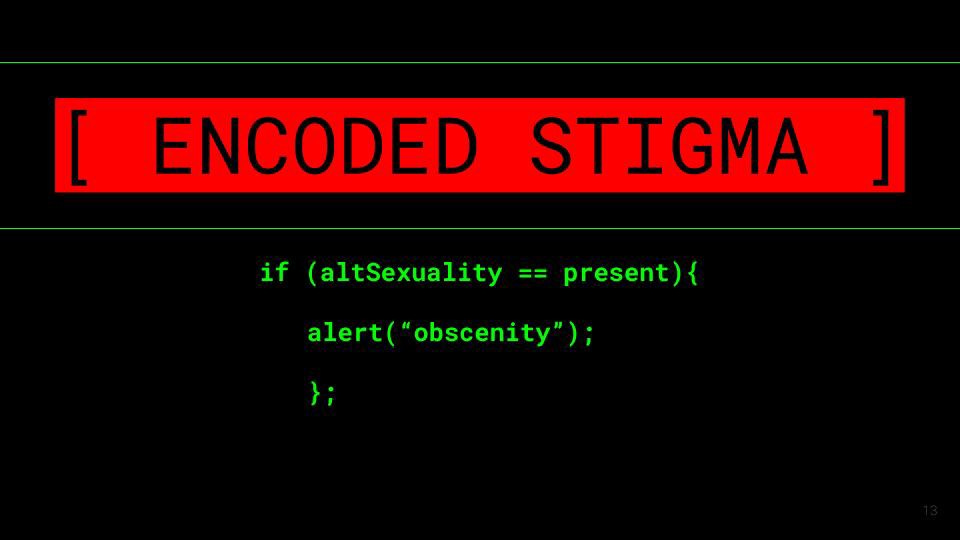

Decoding Stigma continues research presented by Gabriella Garcia at the Interactive Telecommunications Program at New York University. Garcia’s thesis, Shift Control, End Delete: Encoded Stigma, explores how whorephobia becomes encoded in tech design, despite the historically co-constitutive relationship between sexual labor and the development of digital media.

The distribution of sexual material financially supported the historical development of communications technology, from the emergence of photography and home video to cable TV, as the sexual revolution of the 1970s collided with more affordable means of film production and the birth of public-access television. Money made in the erotic market of 1980s proto-internet bulletin board systems (BBSs) literally paid for the material infrastructure that paved the path toward the World Wide Web, before mainstream consumer adoption was even on the horizon.

As necessary innovators, sex workers are consistently early adopters of new technologies, designing, coding, building, and using websites and cryptocurrencies to advertise. In our era of internet ubiquity, sex workers often build up the commercial base of platforms, populating them with content and increasing their size and commercial viability, only to be excised later and treated as collateral damage when those same platforms introduce policies to remove sex entirely.

Now, sweeping policies that prohibit sexual communication, expression, and solicitation are commonplace. While some platforms have responded to legislation such as FOSTA/SESTA, which prohibits the promotion or facilitation of prostitution, many of these policies go far beyond what is legally required. When Apple, the first company to “put the internet in everyone’s pocket,” prohibited explicit content under its “Safety” conditions for third-party app developers, it fundamentally equated erotic material with torture, bigotry, and physical harm. As growing numbers of people access their news and information via mobile (and thus via apps), content must increasingly pass Apple’s Terms and Conditions to be commercially viable.

With algorithms that detect sexual content by picking up particular words, emojis, and hashtags, and recognition software that scans for nudity, body parts, and patches of skin, sex is under increasing surveillance. And yet algorithms are notoriously poor at understanding sex in context. Algorithmic bias and regulatory overreach disproportionately affect sex workers, even where their activities are lawful.

Without forums to openly advertise, share safety and screening tips, discuss harm reduction materials, or simply find community, sex workers can be put in danger by these policies. The policies also have a chilling effect on which sexualities are visible and which critical sexual conversations are speakable.

Algorithmic profiling has resulted in account shutdowns and shadowbanning of sex worker activists. Malicious flagging is also used by other users as a strategy to deplatform and displace sex workers. Suspensions, bans, digital gentrification, and lack of access put sex workers in precarious positions and act as barriers to participation in online spaces and digital economies, threatening our livelihoods. Sex workers are barred from using the marketing tools available to other workers, and financial discrimination is rife: payment processors ban sex workers entirely or deem sex work at ‘high risk’ of fraud and chargebacks.

Alongside this erasure, surveillance technologies are capturing sex workers’ data and misusing it. Legal name policies, requirements to upload photographic identification, the use of facial recognition software by payment processors, and social media features that ‘out’ sex workers to clients all heighten risks for sex workers. The mining, sharing, and sale of data expose sex workers to detection by law enforcement and to increased immigration scrutiny, detention, or deportation.

Early tech exploitation of sex workers as internet infrastructure builders has now been surpassed by the development of carceral technologies to monitor sex worker behaviour. To Big Tech, the sex worker is as indispensable as they are disposable.

It’s with all this in mind that Decoding Stigma formed. Joining up with Livia Foldes of Parsons School of Design at the New School and Danielle Blunt of autonomous collective Hacking//Hustling as the group’s core infrastructure, Decoding Stigma have mobilised a group of critical sex thinkers — an interdisciplinary, cross-institutional mix of folk across tech, design, law, public health, gender studies, computer science, anthropology, and clinical social work — to deconstruct and re-generate the relationship between sex and tech.

Part of this agenda involves cultivating an ongoing open-source syllabus that serves as a ‘living learning hub of readings, resources, citable research, articles, and teaching toolkits’, for which the group plans to hold a live edit-a-thon.

Since its inception this fall, Decoding Stigma has also been participating in workshops on data feminism and equitable participatory design, and presenting to students in Melanie Hoff’s Cybernetics of Sex class, which explores what automatic control systems teach us about sex.

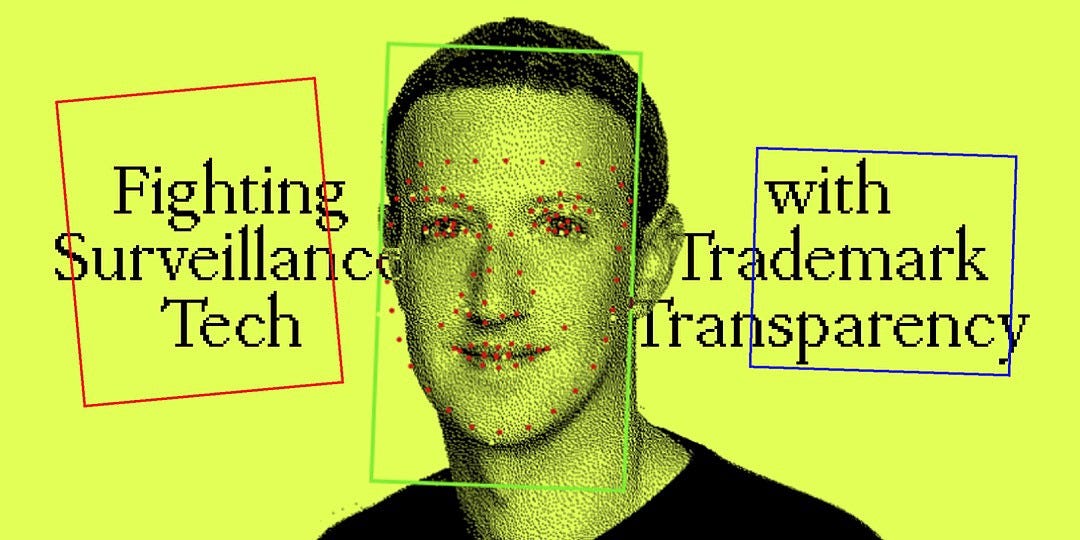

In November, the group held its first interactive workshop ‘Fighting Surveillance Tech with Trademark Transparency’ in collaboration with Amanda Levendowski and Kendra Albert, which explored how sex workers could search trademark disclosure databases to identify the development of new surveillance technologies that could threaten sex worker privacy, safety, and livelihoods.

In December, Decoding Stigma will host its first “Freedom to F*cking Dream” workshop with Sasha Costanza-Chock of TransFeministTech and Joana Varon of Coding Rights, inviting sex workers and allies to envision a future where technology is built by sex workers.

This will be a jumping-off point for a series of conversations led by Chibundo Egwuatu, whose doctoral research centers black sex worker activism, de/criminalization, and liberation. Because the displacement, exclusion, and disappearance of sex workers from digital space is global and growing, these conversations will connect activists working and organizing around the futurity of tech and sex work across the digital divide and across borders.

These are just some of the many steps Decoding Stigma is taking toward a future of tech where sex workers can see ourselves, each other, and our communities thrive.

Taking a sex worker lens to tech is not simply about involving more sex workers in tech design, although the infiltration of sex workers into this space will no doubt shift the kinds of technologies that are imagined and built. These are fundamental questions about ethical design, participation, access, privacy, surveillance, violence, and re-visioning new worlds.

What would tech look like if it were designed by sex workers? Stay tuned and you might just find out.

To donate funds, skills, or other resources, propose a collaboration or workshop, or simply join the conversation, visit Decoding Stigma.